Understanding 3DMark results from the iPhone 5s and iPad Air

November 4, 2013

This post is longer and more technical than usual, which may be a good or a bad thing depending on your point of view! Today, we are answering the question:

Why does the iPhone 5s score the same as the iPhone 5 in the 3DMark Ice Storm Physics test when it is supposed to be twice as powerful?

The 3DMark benchmark test for mobile devices is called Ice Storm. It is available on Windows, Windows RT, Android and iOS, and scores can be compared across platforms.

Ice Storm contains two Graphics tests for measuring GPU performance and a Physics test for measuring CPU performance. These scores are combined to give an overall 3DMark Ice Storm score for a device.

Ice Storm Unlimited is a version of the Ice Storm test that can be used to make chip-to-chip comparisons of CPUs and GPUs without vertical sync, display resolution scaling and other operating system factors affecting the result.

What's inside the iPhone 5s?

The iPhone 5s is Apple's new top-of-the-range smartphone. At its heart is a chip called the A7, which has a 64-bit architecture, a first for smartphones. On its website, Apple says that the A7 chip in the iPhone 5s delivers CPU and graphics performance up to 2x faster than the A6 chip in the iPhone 5.

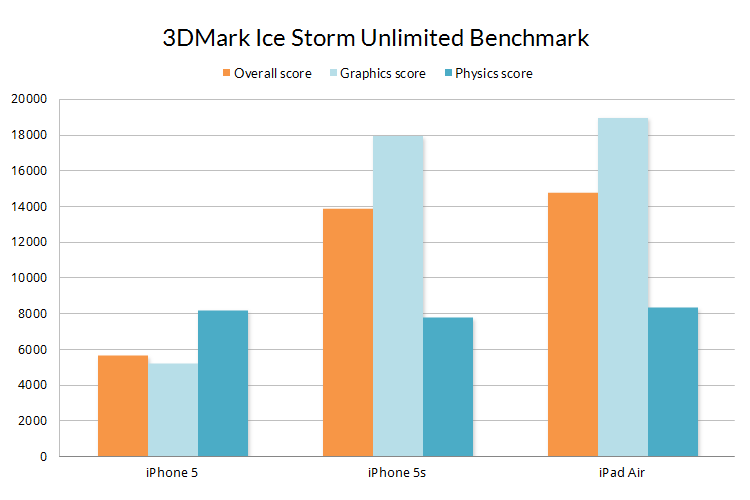

When reviews of the new Apple iPhone 5s started appearing, however, some reviewers noticed something unexpected in their 3DMark results. Although the Ice Storm Unlimited score and the Graphics score are more than twice those of the iPhone 5, the Physics score is practically the same.

Scores are averages of all results received from 3DMark users. Running apps in the background, listening to music, downloading data, or charging the device while running the benchmark will negatively affect the benchmark score.

These results were just as surprising to us, so we immediately set out to understand why the Physics score was the same for both devices. It's worth noting that the A6 and A7 are both dual-core chips clocked at 1.3 GHz (the iPad Air has an A7 running at 1.4 GHz). Any performance differences between the A6 and A7 chips would therefore have to come from differences in architecture rather than raw clock speed.

Before we get into the details, it is necessary to explain the philosophy and design behind 3DMark benchmarks.

What does 3DMark Ice Storm Physics test measure?

3DMark is designed to benchmark real-world gaming performance. 3DMark tests are meticulously designed to mirror the content and techniques used by developers and artists to create games.

To that end, the 3DMark Ice Storm Physics test uses the Bullet Physics Library. Bullet is an open source physics engine that is used in Grand Theft Auto V, Trials HD and many other popular games on PlayStation 3, Xbox 360, Nintendo Wii, PC, Android and iPhone.

The purpose of the Physics test is to measure the CPU's ability to calculate complex physics simulations. The Ice Storm Physics test has four simulated worlds. Each world has two soft bodies and two rigid bodies colliding with each other. This workload is similar to the demands placed on the CPU by many popular physics-based puzzle, platform and racing games.

64-bit is not the answer

We started our investigation by compiling a 64-bit version of 3DMark, since the version available in the App Store is 32-bit in order to be compatible with older Apple devices.

Once we had a 64-bit build we tested an iPhone 5s under controlled conditions in our Test Lab. As expected, we found that the results from the 64-bit version were similar to the 32-bit version, with only a 7 percent improvement. That is not enough to change the ranking of the iPhone 5s in our Best Mobile Devices list.

With that basic check out of the way, it was time to dive deep into the code to see what was happening at the hardware level. This is where things get interesting, but also more technical, so please bear with us.

Bullet time

The majority of CPU time in the Physics test is spent in Bullet's soft body solver, PSolve_Links(). We made a standalone application with code that mimicked PSolve_Links() in order to isolate that function from everything else in the benchmark.
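To make the rest of this discussion concrete, here is a minimal sketch of the kind of loop a soft body link solver runs. It is a simplified, Gauss-Seidel style pass over distance constraints written for illustration, not Bullet's actual PSolve_Links() code; the names `solve_links`, `positions`, `inv_masses` and `links` are hypothetical. The property that matters here is the one described in this post: each link reads two node positions and writes both back in place, and nodes are looked up by index, so where they sit in memory depends on how the node array is laid out.

```python
import math


def solve_links(positions, inv_masses, links, stiffness=1.0):
    """One in-place pass over link (distance) constraints. Hypothetical sketch.

    positions  -- list of [x, y, z] node positions, modified in place
    inv_masses -- inverse mass per node (0.0 pins the node)
    links      -- list of (node_a, node_b, rest_length) tuples
    """
    for i, j, rest in links:
        a, b = positions[i], positions[j]  # two indexed node reads
        delta = [b[k] - a[k] for k in range(3)]
        length = math.sqrt(sum(d * d for d in delta))
        w = inv_masses[i] + inv_masses[j]
        if length == 0.0 or w == 0.0:
            continue  # degenerate link, or both ends pinned
        # Share the length error between the two nodes by inverse mass.
        correction = stiffness * (length - rest) / (length * w)
        for k in range(3):
            # These writes are read again by any later link using node i or j.
            a[k] += delta[k] * correction * inv_masses[i]
            b[k] -= delta[k] * correction * inv_masses[j]
```

For example, two free nodes at x = 0 and x = 2 joined by a link of rest length 1 end up at x = 0.5 and x = 1.5 after one pass with `stiffness=1.0`.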

When this standalone app was benchmarked we saw a large speed increase on the iPhone 5s over the iPhone 5. This was a surprise, so we spent a few days trying to understand what was happening.

The crucial difference between our test app and the Bullet implementation was the way the simulation's data structure was arranged in memory.

Links and Nodes

In simple terms, Bullet's btSoftBody object contains arrays of Nodes and Links that describe the physical properties of the object. We found that when the node and link data was laid out in memory so that the A7 CPU could access it sequentially, as in our test app, performance increased. The gain was largest when the A7 read through memory in ascending address order and slightly smaller when it read in descending order.

Bullet, by contrast, places this data in memory in a more scattered order. The A7 could not read from memory as quickly when it had to jump back and forth to find what it needed.
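One way to picture "sequential" versus "scattered" is to list the node indices a link loop touches, in order, and count how often consecutive reads land far apart. The sketch below does that and also shows one possible layout pass: renumbering the nodes in the order the links first use them. All names here (`access_sequence`, `count_jumps`, `renumber_nodes`) are hypothetical, the jump count is only a crude proxy that ignores cache lines and prefetchers, and we are not claiming this is exactly how our custom build rearranged the data.

```python
def access_sequence(links):
    """Node indices in the order a link loop reads them."""
    return [n for i, j, _ in links for n in (i, j)]


def count_jumps(sequence, max_stride=1):
    """Count consecutive accesses more than max_stride slots apart.

    A crude stand-in for non-sequential memory access; ignores caches.
    """
    return sum(1 for p, q in zip(sequence, sequence[1:]) if abs(q - p) > max_stride)


def renumber_nodes(positions, links):
    """Store nodes in the order the links first touch them.

    Returns (new_positions, new_links) with link indices rewritten.
    Nodes that no link refers to keep their relative order at the end.
    """
    new_index = {}
    for n in access_sequence(links):
        new_index.setdefault(n, len(new_index))
    for n in range(len(positions)):
        new_index.setdefault(n, len(new_index))
    new_positions = [None] * len(positions)
    for old, new in new_index.items():
        new_positions[new] = positions[old]
    new_links = [(new_index[i], new_index[j], rest) for i, j, rest in links]
    return new_positions, new_links


if __name__ == "__main__":
    # Four nodes of a chain, stored out of chain order (2, 0, 3, 1).
    pos = [[1.0, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
    lnk = [(2, 0, 1.0), (0, 3, 1.0), (3, 1, 1.0)]
    print(count_jumps(access_sequence(lnk)))  # 3
    _, lnk2 = renumber_nodes(pos, lnk)
    print(count_jumps(access_sequence(lnk2)))  # 0
```

After renumbering, the same chain is read as nodes 0, 1, 1, 2, 2, 3, which is the "words in order on the page" case from the book analogy.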

To understand this better, it can help to think of device memory as a book. When the words in a book are arranged in order on the page you can read quickly. If the words of the story are scattered randomly throughout the book, however, your reading speed will be much slower.

The key difference is that the A6 doesn't seem to mind whether the data is in or out of order. It has broadly the same performance in both cases. The A7, however, is much faster when the data is in order. When the data is out of order, it performs no better than the A6.

How fast is the A7 chip? It depends.

While analysing the data from our test app, we found a data dependency in Bullet's simulation that also has an impact on the A7's CPU performance.

The way Bullet stores the data for soft bodies results in a number of cases where adjacent links share a common node. As the PSolve_Links() function reads and writes nodes while it iterates over each link, there is a data dependency between links that have a node in common. Iteration X updates the position of the node, which means that iteration X+1 cannot be calculated until the new position of the shared node has been written back to memory.

This memory dependency limits the ability of the A7 to speculate beyond a certain point because it has to respect a possible dependency. This prevents the CPU from making full use of its out-of-order resources.
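A rough way to see how often this happens is to count pairs of nearby links that share a node. The helper below is a hypothetical sketch: the name `dependent_pairs` and the `(node_a, node_b, rest_length)` link tuples are our assumptions, not Bullet's data structures. With `window=1` it counts adjacent links only; a larger `window` also counts pairs a few iterations apart, on the assumption that a dependency slightly further ahead can still limit how far the CPU is able to run ahead.

```python
def dependent_pairs(links, window=1):
    """Count link pairs at most `window` positions apart that share a node.

    In an in-place solver each such pair is a read-after-write dependency
    through memory: the later link reads a node the earlier one just wrote.
    """
    count = 0
    for k, (a0, a1, _) in enumerate(links):
        for b0, b1, _ in links[k + 1 : k + 1 + window]:
            if {a0, a1} & {b0, b1}:
                count += 1
    return count


if __name__ == "__main__":
    chain = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 1.0), (3, 4, 1.0)]
    print(dependent_pairs(chain))  # 3: every neighbouring pair shares a node
```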

Returning to our book analogy, it is like trying to read a book while waiting for it to be written!

Does it make a difference?

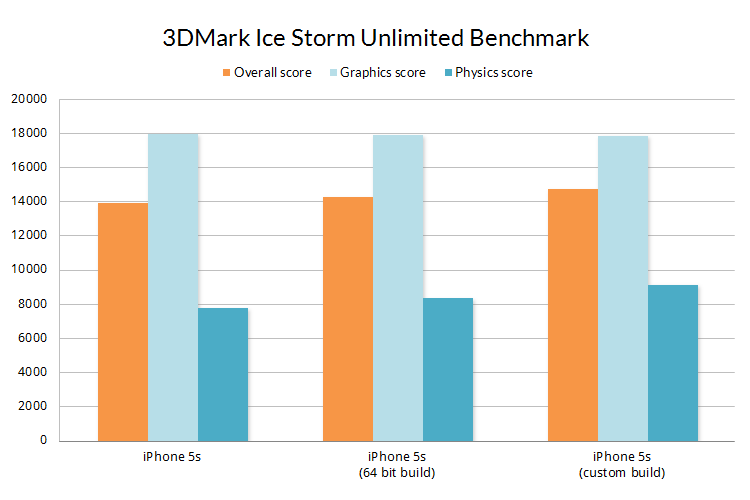

We continued our investigation by creating a custom build of 3DMark using a modified Bullet Physics function that streamlined how the data was written to memory and reduced data dependencies.

You can see the results from this custom build in the chart. The Physics score improved by 17 percent, pulling the overall score up with it.

Scores for iPhone 5s are averages of all results received from 3DMark users. Scores for iPhone 5s (64 bit build) and iPhone 5s (custom build) are from tests conducted under controlled conditions in Futuremark's Test Lab.

In our custom build, the links were reordered in the data structure to minimize the cases where adjacent links shared a common node. This creates a gap between the time when a node position is updated and when it is next read, which in turn allows the CPU to continue execution without risking a dependency violation.
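The idea of putting distance between links that share a node can be sketched as a simple greedy pass: repeatedly take the first remaining link that shares no node with the last `window` links already placed, and fall back to the next link in line when none qualifies. This is a hypothetical illustration (the name `reorder_links` and its parameters are ours), not the reordering used in our custom build, and its O(n²) cost is not tuned.

```python
def reorder_links(links, window=1):
    """Greedily reorder links so that, where possible, no link shares a node
    with any of the previous `window` links. O(n^2); a sketch, not tuned.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    remaining = list(links)
    ordered = []
    while remaining:
        # Nodes touched by the most recently placed links.
        recent = {n for i, j, _ in ordered[-window:] for n in (i, j)}
        # First remaining link that avoids them; otherwise take the first link.
        pick = next(
            (k for k, (i, j, _) in enumerate(remaining)
             if i not in recent and j not in recent),
            0,
        )
        ordered.append(remaining.pop(pick))
    return ordered


if __name__ == "__main__":
    chain = [(k, k + 1, 1.0) for k in range(6)]
    for link in reorder_links(chain):
        print(link[:2])
    # prints (0, 1), (2, 3), (4, 5), (1, 2), (3, 4), (5, 6) on separate lines
```

In the reordered chain no two consecutive links share a node, whereas every consecutive pair in the original order did. Any pass like this is extra work done before the solver runs.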

Reordering the links, together with arranging them sequentially in memory, results in speed increases for the A7 in our 3DMark custom build. However, the iPhone 5s would only gain a couple of places in our Best Mobile Devices list with this build and would still trail behind the latest Snapdragon 800 devices.

There was no performance benefit when we tested the iPhone 5 with our custom build. What's more, we found that performance decreased slightly on some Android devices due to the extra processing steps required to reorder the links.

Out of the lab, into the world

Physics simulations rely on complex, interlinked data structures. Arranging for such structures to be stored sequentially in memory with minimal dependencies is non-trivial to say the least. Our experiment worked for us because we knew exactly what data we would be using.

It is our opinion that developers coding mobile games would not normally spend time optimizing how their data is laid out in memory, especially if the gains were relatively small and would only benefit a limited number of devices.

There are other tasks, such as image processing and file compression, that are less likely to have data dependencies and are more suited to reading data sequentially from memory. This can also be the case for artificial benchmarks that simply loop a math function or low level graphics call for a few seconds to estimate performance.

Summary

In 3DMark Ice Storm Unlimited, the iPhone 5s scores more than twice as high as the iPhone 5 (13901 vs 5648).

In the Graphics test, which measures GPU performance, the A7 in the iPhone 5s achieves a score more than three times higher than the A6 in the iPhone 5 (17961 vs 5191).

In the Physics test, which measures CPU performance, there is little difference between the iPhone 5s and the iPhone 5; the iPhone 5s actually scores slightly lower (7783 vs 8197).
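The ratios behind these summary statements are easy to check. The short script below simply divides the average iPhone 5s score by the average iPhone 5 score for each test quoted above; the names `SCORES` and `ratios` are ours.

```python
# Average 3DMark scores quoted above: (iPhone 5s, iPhone 5).
SCORES = {
    "Ice Storm Unlimited": (13901, 5648),
    "Graphics": (17961, 5191),
    "Physics": (7783, 8197),
}


def ratios(scores):
    """iPhone 5s score divided by iPhone 5 score, rounded to 2 decimals."""
    return {test: round(a / b, 2) for test, (a, b) in scores.items()}


if __name__ == "__main__":
    for test, r in ratios(SCORES).items():
        print(f"{test}: {r:.2f}x")
    # Ice Storm Unlimited: 2.46x
    # Graphics: 3.46x
    # Physics: 0.95x
```

So the overall score is about 2.5 times higher and the Graphics score about 3.5 times higher, while the Physics score is about 5 percent lower.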

Physics simulations, even in seemingly simple games, generate complex data structures. The way these structures are stored in memory can limit the CPU performance of the A7 chip.

The A7's CPU is no faster than the A6 when dealing with non-sequential data structures with memory dependencies as used in the Ice Storm Physics test.

The A7's CPU can significantly outperform the A6 for other tasks with less complex data structures. However, we believe that benchmarking with complex tests that mirror real-world scenarios is more useful than measuring performance with simplistic low-level tests.

Using a modified version of the Bullet Physics Library that optimized the data structures resulted in a 17% improvement in performance on the iPhone 5s, no change on the iPhone 5, and a slight decrease for some Qualcomm-powered Android devices.

It is our opinion that a typical developer creating a cross-platform mobile game would not spend time trying to optimize memory allocation for a relatively small performance gain on a limited number of devices.

We do not make optimizations to our benchmarks that would primarily benefit a single device, chip or architecture since the purpose of a benchmark is to test hardware using the same content and settings on every device.

The Bullet Physics Library is open source. Any optimizations accepted into the main branch are likely to be included in our next 3DMark test; however, we are not planning to make changes to 3DMark Ice Storm as a result of this analysis.

Frequently asked questions

Over the last few weeks we've been asked many questions about 3DMark and the iPhone 5s. Here we answer the most common ones for everyone's benefit.

Can I trust 3DMark Ice Storm Physics test results for the iPhone 5s and iPad Air?

Yes. 3DMark is designed to benchmark real-world gaming performance. The Physics test uses an open source physics library that is used in Grand Theft Auto V, Trials HD and many other best-selling games for PC, console and mobile. Higher scores in the 3DMark Ice Storm Physics test directly translate into improved performance in games that use the Bullet Physics Library and are a good indicator of improved performance in other games.

Is it fair to benchmark a 64-bit device with a 32-bit benchmark?

In our test lab the score from a 64-bit version of 3DMark Ice Storm was only 7 percent better than the 32-bit app. That is not enough to change the ranking of the iPhone 5s in our Best Mobile Devices list.

Even so, we are planning to release a 64-bit version of 3DMark. It will be available in the App Store soon.

Are you going to optimize 3DMark for the iPhone 5s and iPad Air?

Using a modified version of the Bullet Physics Library we were able to improve performance on the iPhone 5s by 17 percent. The iPhone 5s would only gain a couple of places in our Best Mobile Devices list from this optimization and would still trail behind the latest Snapdragon 800 devices.

Furthermore, there was no improvement on the iPhone 5 with the modified test and a very small decrease in performance on Qualcomm-powered Android devices.

We do not make optimizations to our benchmarks that would primarily benefit a single device, chip or architecture since the purpose of a benchmark is to test hardware using the same content and settings on every device.

The Bullet Physics Library is open source. Any optimizations accepted into the main branch are likely to be included in our next 3DMark test; however, we are not planning to make changes to 3DMark Ice Storm as a result of this analysis.

What does Apple say?

We shared our code and data with Apple as part of our investigation. Our contacts were very helpful and confirmed our results with their own tests.

I have another question!

You can ask us questions in our forum.
